Introduction

The world of artificial intelligence (AI) is changing fast. It’s moving beyond chatbots that simply respond to prompts. Today, agentic systems powered by a large language model (LLM) can take action—from booking flights to processing refunds and managing inventory at scale. Platforms like OpenAI and Google AI are accelerating this shift toward autonomous execution.

This evolution of AI automation can significantly improve productivity and operational efficiency. However, it also introduces new risks. Enterprises must ensure these agentic AI systems remain aligned, safe, and controllable. Before deploying such solutions, it’s essential to establish strong AI guardrails and operational boundaries. Resources from IBM Research and MIT Technology Review highlight why governance is critical as autonomy increases.

Understanding Agentic RAG and Its Evolution Beyond Standard RAG

Agentic Retrieval-Augmented Generation (RAG) represents a major advancement over traditional RAG architectures. Standard RAG retrieves relevant documents and generates answers.

Agentic RAG, however, decides when to retrieve information, which tools or APIs to call, and what actions to take afterward. This turns AI from a passive information retriever into an autonomous assistant that can make decisions and carry them out. As enterprise AI guides from Microsoft Azure AI explain, this shift enables deeper workflow automation but also demands tighter oversight.

Key Differences Between Standard Retrieval-Augmented Generation and Agentic RAG

The core difference lies in what each system is built to do. A standard RAG system is built to answer questions. It retrieves text relevant to the question, then uses a large language model (LLM) to read that text and generate an answer. Its job ends once it has delivered the information.

Agentic RAG is built to complete tasks. It still uses retrieval and an LLM, but it uses them to make decisions as well as to gather facts. For example, an agentic RAG system might call an external API, query a database, or send an email. Because it routes requests, validates intermediate results, and runs tools, agentic RAG is a multi-stage workflow rather than a single question-and-answer step.

This difference matters for safety. Guardrails for standard RAG aim to keep harmful content out of the input and output. Agentic systems need additional rules that govern actions. For example, a rule might forbid certain tools entirely or require a person to approve a sensitive step before it runs.

According to AWS Machine Learning, these systems must be designed with layered security controls from the start.

How Agentic RAG Enables Task Automation in Enterprise Use Cases

In the enterprise, agentic RAG turns complex, multi-step work into automated workflows. These systems do not just surface information. They use it to get things done, which helps the company move faster. Consider a customer service request for a refund. A capable agentic RAG system will not just recite the refund policy. It will carry out the refund from start to finish.

This level of automation is possible when the AI has the tools it needs and knows how to use them. In a case study from Avi Medical, the agentic system could handle 81% of the work of scheduling appointments, which led to significant cost savings. It worked because the agent was integrated directly with the scheduling systems.

Here are some ways that this works in practice:

- Compliance Chatbots: A financial services chatbot can answer simple rate queries quickly by pulling data straight from the database. For harder questions about rules and regulations, it uses document retrieval to find the answer.

- Inventory Management: An agent can monitor stock levels, use machine learning to forecast shortages, and place new orders with suppliers as needed.

- Customer Support: The system can check a customer’s request against company policy and then start the return process on its own, without help from staff.


The Rising Stakes: Why Enterprises Demand Stronger Guardrails

When an AI system can spend money or change customer records, the risk is far higher than with a simple chatbot. Companies are right to worry about wrong actions taken by AI, which can lead to financial loss, legal trouble, or reputational damage. A single mistake by an AI working on its own can cause serious problems, and security teams and senior managers want to prevent exactly that.

This is why many businesses want stronger protections than the standard safety filters that LLM providers offer. Those basic filters are not designed for the specifics of your day-to-day operations, and they do not reliably stop an agent from misusing a tool. In a real production setting, people need more control and more confidence that the system behaves as intended. Research from Harvard Business Review emphasizes that AI governance is no longer optional. It’s a business requirement.

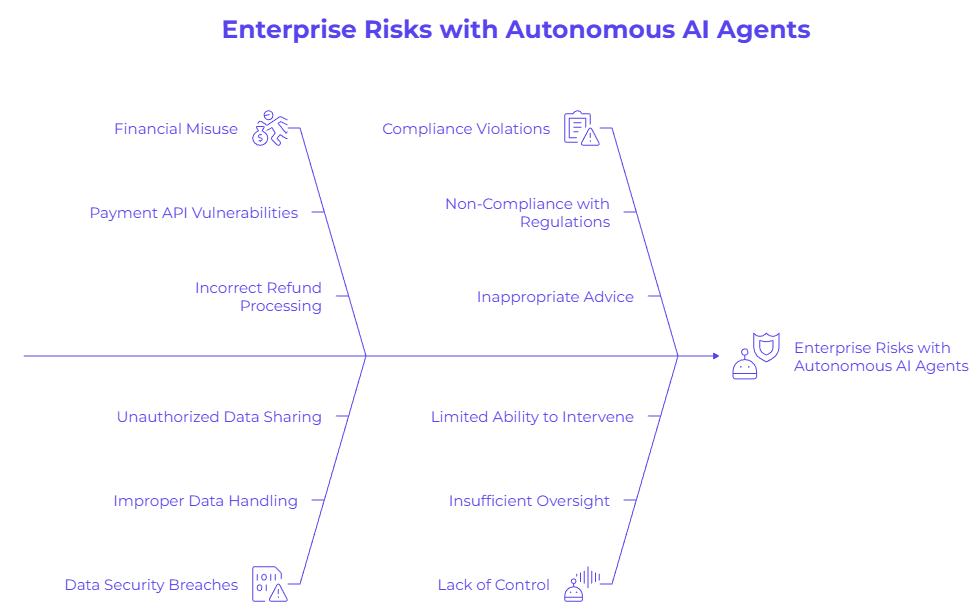

Enterprise-Level Concerns with Autonomous AI Agents

Using agentic systems in a company raises new concerns. These systems can make work faster and easier, but the risk is high if an AI agent chooses the wrong action or acts without approval. Teams are understandably uneasy about an AI acting without close supervision. Without proper oversight, agents may misinterpret intent, misuse API access, or expose sensitive data. Cybersecurity discussions by Cloudflare frequently highlight these issues.

These concerns go beyond the model generating the wrong text. They affect day-to-day operations. An agent given too much control might misread a request and take a harmful real-world action, such as refunding the wrong person, deleting important data, or giving a client incorrect information. Any of these can make regulatory compliance much harder.

Here are some of the main things big companies worry about:

- Financial Misuse: If an agent has access to payment APIs, an attacker could manipulate it into sending money without authorization or issuing improper refunds.

- Data Security Breaches: A misconfigured agent could expose sensitive customer data or leak internal documents.

- Compliance Violations: In regulated fields such as healthcare or finance, an agent may break laws such as HIPAA or TILA. It can do so by giving advice it is not permitted to give or by mishandling data.

The Importance of Reliability, Compliance, and Control in Production Environments

In a demo, occasional mistakes are usually acceptable. In live production, failures are costly, so reliability, compliance, and control become essential. Many AI projects fail because they move from the safety of the lab into the real world without preparing for what can happen there.

You have to plan for failure from the start. If your system has four steps and each succeeds 95% of the time, the whole system succeeds only about 81% of the time (0.95 × 0.95 × 0.95 × 0.95 ≈ 0.81). That means roughly one run in five fails, which is not good enough for most businesses. Define clear metrics for latency, accuracy, and cost per query before you build anything.
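You can check the compounding arithmetic above with a few lines of Python. This is a minimal sketch that assumes each step fails independently of the others. Correlated failures would change the numbers.

```python
def pipeline_success_rate(step_rates):
    """Probability that every step succeeds, assuming independent failures."""
    total = 1.0
    for rate in step_rates:
        total *= rate  # each extra step multiplies in its own success rate
    return total


rate = pipeline_success_rate([0.95] * 4)
print(f"End-to-end success: {rate:.1%}")      # End-to-end success: 81.5%
print(f"Failure chance:     {1 - rate:.1%}")  # Failure chance:     18.5%
```

Adding a fifth step at the same 95% rate would lower end-to-end success to about 77%. This is one reason to keep agent workflows as short as the task allows.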

Control means having the final say. You need to be able to observe what your agent does, override its decisions, and ensure every result fits your brand and meets the regulations that apply to you. Without this control, you are letting an unpredictable system run important parts of your business.

Core Risks in Agentic RAG Workflows

Two of the most critical risks in agentic AI systems are infinite execution loops and unauthorized API actions. NVIDIA’s enterprise AI research at NVIDIA AI highlights how unchecked autonomy can quickly escalate cloud costs and operational damage. When you let AI take action, you open up new and serious risks. Without the right rules, agentic RAG systems have major blind spots. These are not minor mistakes. They are the main failure points that can send a system out of control.

These risks show that you cannot simply connect an LLM to your tools and assume it is safe. You need a secure LLM workflow that expects things to go wrong and stops these problems before they escalate. Let’s look at what can go wrong.

Infinite Loops and the Danger of Unchecked Autonomy

One of the biggest and most expensive problems in agentic systems is the never-ending loop. The agent gets stuck repeating the same action: it tries, fails, and tries again to complete a step in its workflow. For example, suppose an agent is instructed to retry a job until it succeeds. A persistent error can then make it repeat the same API call indefinitely.

Giving an LLM too much freedom can cause real problems. Each attempt may involve LLM calls, database queries, and other steps, all of which cost money. If an agent gets stuck and keeps running over a weekend, you could lose thousands of dollars before anyone notices. This is a realistic risk in day-to-day LLM deployments, not just a theoretical one.

To prevent endless loops, the agent needs a memory of what it has already tried and why each attempt failed. Instead of blindly repeating the same action, it can try a different approach. If nothing works, it can ask a person for help. This turns a potentially large problem into a manageable one.
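Here is a minimal sketch of this pattern in Python. It assumes the agent has a small list of alternative strategies. The names `run_with_loop_guard`, `EscalateToHuman`, `call_primary_api`, and `call_cached_copy` are hypothetical and do not come from any framework. Each strategy sees the failure history, the total number of attempts is capped, and when every option fails the agent escalates to a person instead of looping.

```python
class EscalateToHuman(Exception):
    """Raised when every strategy has failed and a person must take over."""

    def __init__(self, failures):
        super().__init__(f"{len(failures)} attempt(s) failed; escalating")
        self.failures = failures  # full history handed to the human reviewer


def run_with_loop_guard(strategies, max_attempts=3):
    """Try alternative strategies with a hard cap on total attempts.

    Each strategy is a (name, fn) pair. fn receives the list of earlier
    failures so it can adapt instead of repeating the same call.
    """
    failures = []  # memory: what was tried and why it failed
    for name, fn in strategies[:max_attempts]:
        try:
            return fn(failures)
        except Exception as err:  # a real system would catch narrower errors
            failures.append((name, str(err)))
    raise EscalateToHuman(failures)


# Hypothetical strategies for illustration.
def call_primary_api(history):
    raise TimeoutError("inventory API timed out")


def call_cached_copy(history):
    # Only reached after the live call has already failed.
    return {"source": "cache", "after_failures": len(history)}


result = run_with_loop_guard([("primary", call_primary_api), ("cache", call_cached_copy)])
print(result)  # {'source': 'cache', 'after_failures': 1}
```

The hard cap is what bounds the cost. Even if every strategy fails, the loop ends after `max_attempts` calls and hands the history to a person.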

Unauthorized API Access and Potential for Rogue Actions

Perhaps the biggest concern is an agent doing things it is not supposed to do, for example by reaching an API it lacks permission to use. Consider a customer support agent designed only to look up order details. If a vulnerability lets it reach the “process refund” API, an attacker could trick it into issuing fraudulent refunds.

Attackers keep finding new ways around weak security. One known technique is the “Chain-of-Jailbreak” attack. The attacker steers the model toward an unsafe action gradually, over several steps. Basic filters check each step in isolation, so they often miss it. This kind of attack can trick an agent into running a function it was never meant to use.

These weaknesses are what keep security teams up at night. An AI that can delete data, send harmful emails, or move money without approval is a serious risk. To keep agentic systems safe, you have to restrict API access and ensure the agent can act only within its clearly defined role.

Shortcomings of Standard LLM Guardrails in Agentic RAG

You might assume that the built-in safety features of a large language model (LLM) will keep your agentic system safe. In practice, these standard guardrails fall short for the hardest parts of agentic RAG. They are mostly designed to block harmful text as it goes in or comes out. They work like a guard watching the door at the start and end of the conversation.

But the main risks in agentic systems lie in what the agent does. Standard input guardrails and output validation do not monitor how tools are used, which API calls are made, or how the LLM reasons through a problem. Attackers can exploit these blind spots.

Studies referenced by Stanford AI Lab show how easily static rules can be bypassed through prompt manipulation.

Limitations of Traditional Guardrail Approaches

Most traditional guardrails are too generic for real business needs. They focus on broad risks such as violent threats or adult content, but they lack the context to spot risks specific to your business. A reply could pass common safety rules and still go against your company’s brand or break an industry regulation.

These guardrails are also easy to get around. Studies show that simply rephrasing a request can lead models to give harmful advice they initially refused. A common way to dodge the filters is to claim the advice is “for a friend” or “for a presentation.” Tricks this simple show how large the blind spots in input guardrails are.

Here are some key limitations:

- Lack of Context: They do not understand what makes your brand unique, nor do they know your legal or compliance requirements.

- Easily Bypassed: Attackers can evade them by rephrasing requests or by splitting one harmful request into several harmless-looking ones.

- Focus on Text, Not Action: They check text only. They do not stop or control what an agent does with the tools it has.

Why Static Rules Fail With Dynamic Agent Workflows

Agentic systems do not follow a fixed path the way traditional software does. Each run can take a different path depending on what the LLM decides, so you cannot predict exactly what the agent will do before it runs. That is why a simple list of fixed rules cannot keep it safe.

A rule such as “block all mentions of ‘refund’” is too blunt. It may help stop fraud, but it also prevents the agent from clearly explaining your refund policy when a customer needs it. On the other hand, if you let the agent say anything, you leave vulnerabilities open to abuse. The agent can also reason and phrase its replies in new ways, so it can get around any fixed list of rules or blocks you set.
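A toy comparison illustrates the trade-off. The keyword rule and the tool allowlist below are hypothetical examples, not a recommended policy. The keyword filter blocks a legitimate policy question but misses a trivial rephrasing. The action-level check lets the agent discuss refunds freely and gates only the risky tool call.

```python
BLOCKED_WORDS = {"refund"}  # hypothetical static rule


def keyword_filter_allows(text):
    """Static rule: reject any text that mentions a blocked word."""
    words = {w.strip(".,?!").lower() for w in text.split()}
    return not (words & BLOCKED_WORDS)


# Action-level rule: talking about refunds is fine; issuing one is not allowed here.
ALLOWED_TOOLS = {"lookup_policy", "get_order"}  # hypothetical allowlist


def action_policy_allows(tool_name):
    return tool_name in ALLOWED_TOOLS


question = "What is your refund policy for damaged items?"
print(keyword_filter_allows(question))               # False: blocks a legitimate question
print(keyword_filter_allows("Please reimburse me"))  # True: a rephrasing slips through
print(action_policy_allows("lookup_policy"))         # True: answering is allowed
print(action_policy_allows("issue_refund"))          # False: the risky action is gated
```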

Adaptability is what makes agentic systems powerful, and it is also what makes them hard to secure. Static rules cannot cover every way an agentic system might interpret a situation or decide what to do. You need a more flexible approach that monitors the system’s behavior in real time and continuously checks that it stays safe.

Building Advanced “Fences”: Essential Guardrails for Agentic RAG

To use agentic automation safely, you need to go beyond basic safety filters and build strong “fences” around your AI. These advanced guardrails address the specific risks of agentic systems and let you stay in control when you use them on real tasks. For security teams, this means building a defense with multiple layers.

These fences go beyond checking for bad words. They define what the agent is allowed to do. That means setting clear limits on how the agent uses tools and putting firm controls on spending and resources. Together, these steps help prevent wrong actions and keep your AI running safely and predictably. Best practices outlined by Gartner stress the importance of layered defense strategies in autonomous AI.

Action Guardrails: Restricting Tool Usage and Access

Action guardrails are central to keeping agentic systems safe. The idea is simple: always define which tools the agent can use and what it is allowed to do with each one. This is no longer just about monitoring text. It is about controlling what an agent can and cannot do.

For example, you can let an agent read a customer’s order history but not change or delete it. For higher-risk actions, such as processing a payment, you can add a checkpoint that requires a person’s approval. The agent does most of the work, such as calculating the refund amount and checking the policy. It then pauses until a person clicks “Approve” before proceeding.

This way, you get the speed of automation along with the safety of human review. Here is how you can set up action guardrails:

- Role-Based Access: Assign agents roles that limit them to specific tools, just as you would for human employees.

- Human-in-the-Loop (HITL): A person must approve any action that is risky, irreversible, or involves money.

- Tool Input Validation: Before an agent calls any tool, validate its inputs to make sure they are safe and correctly formatted.
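One way to combine these three controls is a single gateway that every tool call must pass through. The sketch below uses hypothetical tool names (`get_order`, `process_refund`), hypothetical roles, and a $500 validation cap chosen purely for illustration. A production system would also authenticate callers and log every decision.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Tool:
    fn: Callable[..., object]
    validate: Callable[[dict], bool]  # input check run before every call
    needs_approval: bool = False      # human-in-the-loop for risky actions


class ToolGateway:
    """Single choke point between the agent and its tools (illustrative)."""

    def __init__(self, tools, role_permissions):
        self.tools = tools
        self.role_permissions = role_permissions  # role -> set of allowed tool names

    def call(self, role, tool_name, args, approved=False):
        # 1. Role-based access: unknown roles get no tools at all.
        if tool_name not in self.role_permissions.get(role, set()):
            raise PermissionError(f"role {role!r} may not use {tool_name!r}")
        tool = self.tools[tool_name]
        # 2. Input validation before anything executes.
        if not tool.validate(args):
            raise ValueError(f"invalid arguments for {tool_name!r}: {args}")
        # 3. Risky tools pause until a person approves.
        if tool.needs_approval and not approved:
            return {"status": "pending_approval", "tool": tool_name, "args": args}
        return {"status": "done", "result": tool.fn(**args)}


tools = {
    "get_order": Tool(
        fn=lambda order_id: {"id": order_id, "total": 42.0},
        validate=lambda a: isinstance(a.get("order_id"), str),
    ),
    "process_refund": Tool(
        fn=lambda order_id, amount: f"refunded {amount} on {order_id}",
        validate=lambda a: isinstance(a.get("amount"), (int, float)) and 0 < a["amount"] <= 500,
        needs_approval=True,
    ),
}
gateway = ToolGateway(tools, {
    "support_reader": {"get_order"},
    "refund_agent": {"get_order", "process_refund"},
})

print(gateway.call("support_reader", "get_order", {"order_id": "A1"})["status"])  # done
print(gateway.call("refund_agent", "process_refund",
                   {"order_id": "A1", "amount": 20})["status"])                 # pending_approval
```

A single choke point only helps if the agent is handed the gateway and never the underlying tool functions. Otherwise a cleverly prompted agent could skip the checks.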

Implementing Budget and Rate Limits to Prevent Resource Drain

Unchecked automation can quickly consume a lot of resources. An agent may get stuck in a loop or take on too many complex queries at once, causing a sharp rise in LLM token usage and API calls. To prevent this, your guardrail plan must include firm budget and rate limits on tokens, queries, and API calls.

These limits act as a financial safety net. You can set a maximum cost per query, a daily budget for each agent, or a cap on how often it can call an API within a given time window. If the agent exceeds any of these limits, it stops automatically and your team gets an alert so they can investigate.

This stops a small bug from turning into a large bill. Monitoring these metrics is not only about saving money. It also reveals when part of your workflow is not working as it should.

| Guardrail Type | Description |
|---|---|
| Cost Per Query | Sets a maximum dollar amount that can be spent to resolve a single user query. |
| Daily Budget | Caps the total cost an agent can incur in a 24-hour period. |
| API Rate Limit | Restricts the number of calls an agent can make to a specific tool (e.g., 100 calls per minute). |
| Token Limit | Limits the number of tokens an agent can process or generate in a single turn to control cost and latency. |
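A small guard can enforce the four limits in the table if the agent loop calls it before each LLM or tool step. This is a sketch under several assumptions. The thresholds are made-up examples, and the caller passes in each step's cost. The rate limit is a single sliding one-minute window shared by all calls; a per-tool limit would keep one window per tool. Resetting the daily total is left to a scheduler.

```python
import time
from collections import deque


class BudgetExceeded(Exception):
    """Stops the agent; a real deployment would also alert the team."""


class BudgetGuard:
    def __init__(self, max_cost_per_query, daily_budget, max_calls_per_minute,
                 max_tokens_per_turn, clock=time.monotonic):
        self.max_cost_per_query = max_cost_per_query
        self.daily_budget = daily_budget
        self.max_calls_per_minute = max_calls_per_minute
        self.max_tokens_per_turn = max_tokens_per_turn
        self.clock = clock
        self.spent_today = 0.0  # resetting this every 24 h is left to a scheduler
        self.query_cost = 0.0
        self.calls = deque()    # timestamps of recent calls

    def start_query(self):
        self.query_cost = 0.0

    def charge(self, cost, tokens):
        """Check one step against every limit, then record it."""
        if tokens > self.max_tokens_per_turn:
            raise BudgetExceeded(f"turn used {tokens} tokens")
        now = self.clock()
        while self.calls and now - self.calls[0] >= 60:  # drop calls older than 1 min
            self.calls.popleft()
        if len(self.calls) >= self.max_calls_per_minute:
            raise BudgetExceeded("rate limit reached")
        if self.query_cost + cost > self.max_cost_per_query:
            raise BudgetExceeded("per-query cost cap reached")
        if self.spent_today + cost > self.daily_budget:
            raise BudgetExceeded("daily budget exhausted")
        self.calls.append(now)
        self.query_cost += cost
        self.spent_today += cost


guard = BudgetGuard(max_cost_per_query=0.50, daily_budget=20.0,
                    max_calls_per_minute=100, max_tokens_per_turn=4000)
guard.start_query()
steps = 0
try:
    while True:  # simulates an agent stuck in a loop
        guard.charge(cost=0.12, tokens=1500)
        steps += 1
except BudgetExceeded as err:
    print(steps, err)  # 4 per-query cost cap reached
```

With these example numbers, the guard stops the looping agent on its fifth $0.12 step, because that step would push the query past the $0.50 cap.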

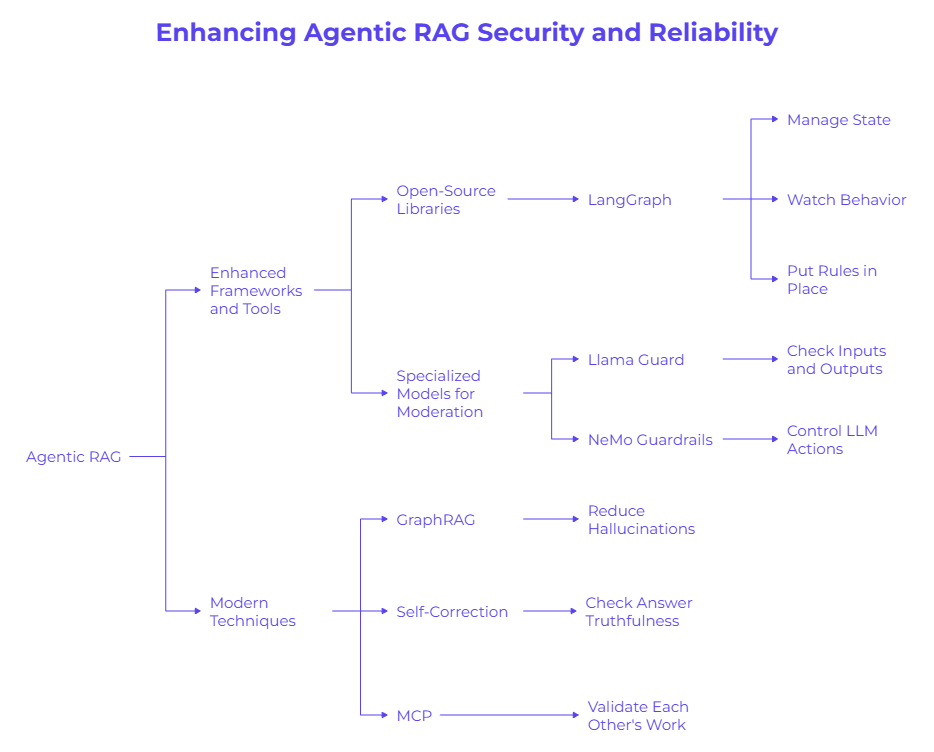

Enhanced Frameworks and Tools for Agentic RAG Security

You do not have to build all these guardrails yourself. A growing set of frameworks and tools for agentic AI helps developers and security teams build safe, reliable systems. According to Arize AI, observability and tracing are now core requirements, not optional extras.

Emerging techniques such as GraphRAG and multi-agent collaboration further reduce hallucinations and improve reliability. Multi-agent setups do this by validating decisions across agents.

Role of Specialized Tools Like Llama Guard and NeMo

Specialized tools make your agentic workflow safer. Frameworks such as LangGraph help you build the agent’s logic and keep track of its state. Tools like Llama Guard add a layer of protection against harmful content. The models used for this are usually small and fast, and they are trained to check inputs and outputs against a set of safety policies.

You can use a tool like Llama Guard to check an agent’s response before it reaches the user. It acts as a second checker that catches policy violations your main LLM might miss. Similarly, NVIDIA’s NeMo Guardrails adds a configurable layer that controls how your LLM behaves, helping it stay on topic and follow defined conversation paths.

These tools are valuable because they are:

- Focused: They do one thing well. Because they are designed specifically for safety moderation, they are fast and accurate.

- Configurable: You can tailor them to your own safety requirements and brand guidelines.

- Agnostic: Many of them work in any agentic workflow and with any LLM.
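The idea of a second checker can be sketched as a list of checks that every draft response must pass before release. The two checks below are deliberately simple, hypothetical stand-ins. They do not call Llama Guard or NeMo Guardrails, whose real interfaces differ. In practice, each check would wrap one of those models or rails.

```python
from typing import Callable, List, Optional

# A check returns None when the text is acceptable, or a short reason when it is not.
Check = Callable[[str], Optional[str]]


def no_account_numbers(text):
    """Hypothetical stand-in for a policy model: flag text containing many digits."""
    digits = sum(ch.isdigit() for ch in text)
    return "possible account number in output" if digits >= 10 else None


def stays_on_topic(text):
    """Hypothetical stand-in for a topical rail: flag banned subjects."""
    lowered = text.lower()
    for subject in ("stock tip", "medical diagnosis"):
        if subject in lowered:
            return f"off-topic: {subject}"
    return None


FALLBACK = "Sorry, I can't share that. A colleague will follow up."


def release(draft: str, checks: List[Check]):
    """Run every check on the agent's draft before it reaches the user."""
    reasons = [r for r in (check(draft) for check in checks) if r is not None]
    return (FALLBACK, reasons) if reasons else (draft, [])


print(release("Your order ships Monday.", [no_account_numbers, stays_on_topic]))
print(release("Card 4111111111111111 was charged.", [no_account_numbers, stays_on_topic]))
```

Because every check shares the same small signature, you can add or swap moderation models without changing the agent itself.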

Introducing Modern Techniques: GraphRAG, Multi-Agent Collaboration, and Beyond

New techniques are making agentic systems more reliable and capable. One powerful method is GraphRAG. Instead of treating your documents as separate chunks, GraphRAG builds a knowledge graph that links entities, their relationships, and details about them.

This helps the agent answer complex, multi-step queries that a standard vector search struggles with. For example, it can answer a question like “Which of our suppliers have had both declining performance and rising costs over the last year?” Microsoft’s research found that GraphRAG gives better answers to these kinds of queries, though it may take somewhat longer to respond.
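A toy example shows why linked data helps with this kind of question. The triples below are invented, and this is not Microsoft’s GraphRAG implementation. It only illustrates how a graph lets the agent intersect two facts about the same entity. A vector search, by contrast, would return passages that mention each fact separately.

```python
# Toy knowledge graph as (subject, relation, object) triples. Hypothetical data.
triples = [
    ("Acme", "is_a", "Supplier"),
    ("Birch", "is_a", "Supplier"),
    ("Cobalt", "is_a", "Supplier"),
    ("Acme", "performance_trend", "declining"),
    ("Acme", "cost_trend", "rising"),
    ("Birch", "performance_trend", "declining"),
    ("Birch", "cost_trend", "flat"),
    ("Cobalt", "performance_trend", "improving"),
    ("Cobalt", "cost_trend", "rising"),
]


def objects(subject, relation):
    """All objects linked to a subject by one relation."""
    return {o for s, r, o in triples if s == subject and r == relation}


def subjects(relation, obj):
    """All subjects linked to an object by one relation."""
    return {s for s, r, o in triples if r == relation and o == obj}


# "Which suppliers have both declining performance and rising costs?"
answer = sorted(
    s for s in subjects("is_a", "Supplier")
    if "declining" in objects(s, "performance_trend")
    and "rising" in objects(s, "cost_trend")
)
print(answer)  # ['Acme']
```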

Because these advanced techniques produce clearer and more complete information, they also act as a guardrail:

- GraphRAG: Reduces hallucinations by letting the agent reason over linked data rather than isolated passages of text.

- Self-Correction: Methods such as Self-RAG let the model pause and critique its own answers, checking them against the sources it retrieved.

- Multi-agent Collaboration: The most advanced approaches use several agents that review each other’s work before any action is taken.
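Cross-agent validation can be reduced to a simple rule: an action runs only if every independent reviewer approves it. In the sketch below, the “agents” are plain functions with hypothetical names, and the $100 policy limit is made up. In a real system, each reviewer would be a separately prompted model or a deterministic check.

```python
def propose_refund(request):
    """Hypothetical planner agent: turns a request into a proposed action."""
    return {"action": "refund", "order_id": request["order_id"], "amount": request["amount"]}


def policy_reviewer(action):
    """Hypothetical reviewer: checks a made-up $100 policy limit."""
    ok = action["amount"] <= 100
    return ok, ("within refund limit" if ok else "exceeds refund limit")


def make_records_reviewer(known_orders):
    """Hypothetical reviewer: confirms the order actually exists."""
    def review(action):
        ok = action["order_id"] in known_orders
        return ok, ("order exists" if ok else "unknown order")
    return review


def cross_checked(action, reviewers):
    """Approve only if every independent reviewer approves."""
    verdicts = [review(action) for review in reviewers]
    return all(ok for ok, _ in verdicts), [reason for _, reason in verdicts]


reviewers = [policy_reviewer, make_records_reviewer({"A1", "B7"})]
print(cross_checked(propose_refund({"order_id": "Z9", "amount": 40}), reviewers))
# (False, ['within refund limit', 'unknown order'])
print(cross_checked(propose_refund({"order_id": "A1", "amount": 40}), reviewers))
# (True, ['within refund limit', 'order exists'])
```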

Best Practices for Safe and Effective Agentic RAG Deployment

Deploying an agentic RAG system safely takes care and a focus on real-world use. A smart demo is not enough. You have to build a robust system that keeps working when something goes wrong, stays within budget, and leaves a record you can always check. Following established best practices is essential for security teams and developers alike.

That means focusing on systematic evaluation, setting up strong oversight, and making sure you can account for every action your agent takes. These steps turn a high-risk project into a stable one that people can trust.

Policy Enforcement and Human-in-the-Loop Oversight

A good approach combines automated policy checks with targeted human review. A person cannot review everything, but they should review the decisions that matter most. This ensures that large or risky decisions are handled well and keeps people safe.

Set clear rules for your agent. For example, a rule might require that a person review any refund over $100 first. The agent does all the research and prepares everything, but the final step does not happen until someone approves it. This supports compliance and prevents costly mistakes without slowing down the rest of the workflow.

Key elements of this approach include:

- Interruptible Workflows: Tools such as LangGraph let a process pause at a defined point and wait for outside input before continuing.

- Clear Escalation Paths: If the agent cannot find an answer or is not confident, there must be a clear way to hand the question to a knowledgeable person.

- Abstain Policy: The agent should recognize when it does not have a reliable answer. Forcing it to reply to every question leads to hallucinations.
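The $100 approval rule and the three elements above fit together in one small workflow. This is a plain-Python sketch with hypothetical names (`RefundCase`, `run_refund`, `approve`) and an assumed confidence threshold of 0.7. Frameworks such as LangGraph provide built-in interrupt and checkpoint support that would replace the hand-rolled status field used here.

```python
from dataclasses import dataclass, field

APPROVAL_THRESHOLD = 100.00  # refunds above this wait for a person
MIN_CONFIDENCE = 0.7         # hypothetical abstain threshold


@dataclass
class RefundCase:
    order_id: str
    amount: float
    confidence: float          # agent's own estimate that the refund is valid
    status: str = "new"
    notes: list = field(default_factory=list)


def run_refund(case, issue_refund):
    """Advance the case until it finishes or must pause for a human."""
    if case.confidence < MIN_CONFIDENCE:
        case.status = "escalated"  # abstain instead of guessing
        case.notes.append("low confidence; routed to a support lead")
        return case
    case.notes.append(f"policy checked for {case.order_id}")  # research step
    if case.amount > APPROVAL_THRESHOLD:
        case.status = "awaiting_approval"  # interrupt: state kept, nothing executed
        return case
    issue_refund(case.order_id, case.amount)
    case.status = "refunded"
    return case


def approve(case, issue_refund):
    """Resume a paused case after a reviewer clicks Approve."""
    if case.status != "awaiting_approval":
        raise ValueError(f"case {case.order_id} is not awaiting approval")
    issue_refund(case.order_id, case.amount)
    case.status = "refunded"
    case.notes.append("approved by reviewer")
    return case


ledger = []


def pay(order_id, amount):
    ledger.append((order_id, amount))  # stand-in for a real payment call


small = run_refund(RefundCase("A1", 40.0, confidence=0.9), pay)
large = run_refund(RefundCase("B2", 250.0, confidence=0.95), pay)
unsure = run_refund(RefundCase("C3", 30.0, confidence=0.4), pay)
print(small.status, large.status, unsure.status)  # refunded awaiting_approval escalated
approve(large, pay)
print(ledger)  # [('A1', 40.0), ('B2', 250.0)]
```

The paused case keeps its research notes, so the reviewer sees what the agent checked, and the money moves only after approval.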

Monitoring, Tracing, and Auditability in Production Systems

When an agentic system fails, ordinary logs are not much help. A log entry that only says “Error at step 3” does not tell you what went wrong. What you need is tracing. A trace records every step in the agent’s decision-making path for a single query, giving you a clear view of how the system worked.

Tools such as LangSmith and Arize Phoenix provide this visibility. A trace shows the query, the tools used, the documents retrieved, the token cost of each step, and why each choice was made. That level of detail turns hours of guesswork into a few minutes of focused investigation.

A clear record of every action is also essential for compliance and safety, especially in heavily regulated industries. You need a durable history of what your agent does, and tracing creates it. With that record, you can review past decisions, demonstrate compliance to auditors, and spot patterns that may point to a larger problem in the system.
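A minimal tracer can capture the fields listed above and emit them as append-only JSON lines for auditing. This sketch uses only the standard library and is not the LangSmith or Arize Phoenix API. The step names, tool names, documents, and token counts in the usage example are invented.

```python
import json
import time
import uuid
from contextlib import contextmanager


class Tracer:
    """Minimal trace recorder (illustrative; dedicated tools do far more)."""

    def __init__(self, query):
        self.trace_id = str(uuid.uuid4())
        self.query = query
        self.spans = []

    @contextmanager
    def step(self, name, **attrs):
        span = {"step": name, **attrs, "start": time.time()}
        try:
            yield span  # the step can add fields such as tokens or rationale
            span["status"] = "ok"
        except Exception as err:
            span["status"] = f"error: {err}"  # failures are recorded, then re-raised
            raise
        finally:
            span["duration_s"] = round(time.time() - span["start"], 4)
            self.spans.append(span)

    def to_jsonl(self):
        """One JSON line per step, suitable for an append-only audit log."""
        return "\n".join(
            json.dumps({"trace_id": self.trace_id, "query": self.query, **s})
            for s in self.spans
        )


tracer = Tracer("Can I get a refund for order A1?")
with tracer.step("retrieve", tool="policy_search") as s:
    s["documents"] = ["refund-policy.md"]
    s["tokens"] = 350
with tracer.step("decide", tool="llm") as s:
    s["rationale"] = "order is within the 30-day window"
    s["tokens"] = 120
print(tracer.to_jsonl())
```

Each step is appended even when it raises, so the audit trail shows where a run failed as well as what succeeded.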

Conclusion

As enterprises increasingly adopt agentic RAG and autonomous AI systems, strong governance, guardrails, and control mechanisms are essential. While these technologies unlock major efficiency gains, they also introduce serious operational and security risks if left unchecked.

By implementing action-based guardrails, budget limits, and human oversight, organizations can safely harness the power of agentic AI while maintaining trust and compliance. To stay ahead in this rapidly evolving space, continuous learning and proactive risk management are key.

If you’d like expert guidance on deploying secure agentic AI solutions, feel free to reach out—we’re happy to help.


Frequently Asked Questions


Standard LLM guardrails block harmful text on the way in or out. Guardrails for agentic systems go further and control what actions the AI can take. For example, they can block access to certain tools, require human approval for high-risk work, and prevent unauthorized actions such as calling an API without permission.

Common failures include agents getting stuck in endless loops that burn through the budget. Agents can also take actions they should not, such as issuing refunds without the required access, and attackers can manipulate agents into breaking rules. These vulnerabilities pose serious risks for security teams, so the workflow must be properly locked down.

Yes. LangGraph helps track an agent’s state and lets you cap how many steps a run can take, which stops runaway loops. LangSmith provides tracing so you can inspect the full run. Specialized tools such as Llama Guard help keep outputs safe. Together, these give security teams several layers of protection for their agentic systems.